Introduction

In the rapidly evolving digital technology landscape, where speed and responsiveness reign supreme, latency is a pivotal metric that can make or break user experiences. It can also directly affect business revenue and sales performance.

Latency is becoming increasingly important as more and more applications are moving to the cloud. This is because cloud-based applications are often hosted in data centres far away from the users. As a result, latency can significantly impact the performance of these applications.

What is Latency?

Although latency is a common term in distributed systems, its impact is often overlooked and deserves a closer look.

Definition

If we try to come up with a definition of latency, it would be something like this:

Latency refers to the delay between a user’s action and the system’s response to that action.

Real World Example

Have you ever been to a Starbucks on one of those busy days? On those days, you enter Starbucks and join a queue to order your coffee. The queue is usually very quick, and you get to the cashier in no time. This means that the throughput of the order queue is very high.

But then you order your coffee and join another queue to collect it. This queue is usually very slow, and you have to wait a long time until your coffee is ready and the barista calls your name. This is because a limited number of baristas can only make a limited number of coffees per minute. The faster the order queue feeds them new orders, the longer the wait in the coffee queue becomes.

Just as the queue at Starbucks can experience delays, the latency in a distributed system can be influenced by the number of components and the traffic it handles.

However, it’s important to note that high throughput doesn’t always lead to high latency—it depends on system capacity and design.
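The relationship between arrival rate, capacity and waiting time can be sketched with a small simulation. This is a toy model, not a measurement: the function name `simulate_queue`, the fixed arrival interval and the fixed two-second service time are all assumptions made for illustration.

```python
def simulate_queue(arrival_interval, service_time, num_orders, num_baristas=1):
    """Return the latency (waiting + preparation time) of each order.

    Orders arrive every `arrival_interval` seconds and are served first
    come, first served. Each barista needs `service_time` seconds per
    order. All numbers are illustrative.
    """
    # Time at which each barista becomes free again.
    free_at = [0.0] * num_baristas
    latencies = []
    for i in range(num_orders):
        arrival = i * arrival_interval
        # Hand the order to whichever barista is free earliest.
        idx = min(range(num_baristas), key=lambda b: free_at[b])
        start = max(arrival, free_at[idx])
        finish = start + service_time
        free_at[idx] = finish
        latencies.append(finish - arrival)
    return latencies


if __name__ == "__main__":
    # One barista, orders arriving twice as fast as they can be made:
    # every order waits longer than the one before it.
    print(simulate_queue(arrival_interval=1.0, service_time=2.0, num_orders=5))
    # Same arrival rate with two baristas: capacity matches demand,
    # so latency stays flat at the preparation time.
    print(simulate_queue(arrival_interval=1.0, service_time=2.0, num_orders=5, num_baristas=2))
```

With one barista the latency climbs with every order. With two, the same order rate is absorbed without any extra waiting, which is the sense in which high throughput need not mean high latency.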

Connecting It to the Digital World

Imagine the Starbucks queues as a popular e-commerce website during a sale. When you access the site (akin to entering Starbucks), the homepage loads rapidly, showing you all the exciting deals—that’s high throughput. But when you select a product and try to make a purchase, you’re directed to a waiting page (akin to waiting for your coffee) because the payment gateway (the barista) is processing a large volume of orders. Here, despite high throughput in displaying products, there’s noticeable latency when processing the payment, affecting your overall shopping experience.

Why Latency Matters

Latency matters because it can significantly impact user experience and business revenue. Two widely cited experiments illustrate the effect:

Google

In 2006, Google ran an experiment to see how latency affects user experience. They found that a 500ms increase in latency resulted in 20% fewer searches per user.

Amazon

In 2007, Amazon conducted an experiment to see how latency affects sales. They found that a 100ms increase in latency resulted in 1% fewer sales.

Impact on User Experience

Of course, there are questions about the validity of these experiments and how they were conducted. However, the results are still striking and show how latency can affect user experience and business revenue. If you went to Amazon deliberately to buy something, you will probably tolerate waiting a few seconds to get the desired product. But if you are browsing the internet and come across a product on Amazon, you are more likely to leave the website if it takes more than a few seconds to load.

Moreover, if the Amazon experiment is to be believed, 100ms, roughly four times shorter than a blink of an eye, can be annoying enough to make a user leave the website or mobile app. This illustrates the significant, yet often underestimated, impact of minor delays on user experience.

Factors that Affect Latency

Several factors can affect latency. Here are some of them:

-

Distance - The distance between the client and server is the primary factor in latency. The farther apart the two are, the higher the latency will be.

-

Network bandwidth - The bandwidth available on the network can also affect latency. If the network is congested, the latency will increase.

-

Network hardware - The quality of the network hardware can also affect latency. Older or poorly maintained hardware can introduce delays.

-

Software configuration - The software configuration of the network can also affect latency. If the network is not configured correctly, it may add unnecessary delays.

-

Application design - The application’s design can also affect latency. For example, an application that uses a lot of database queries will have higher latency than an application that does not.

-

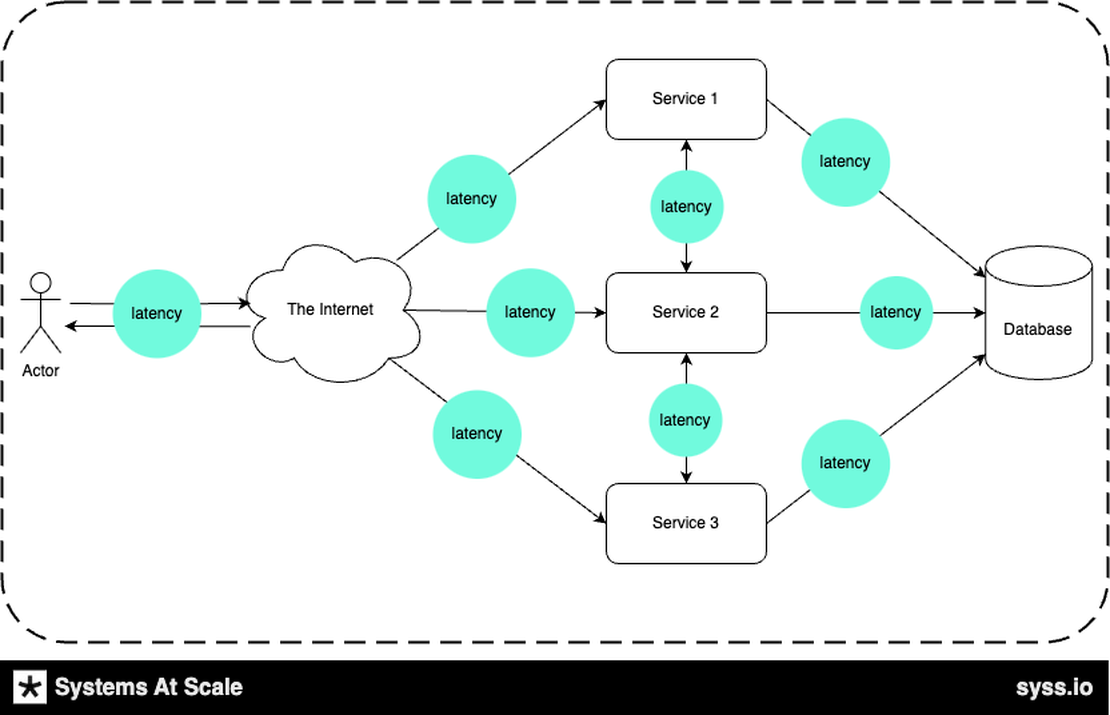

System design - The software architecture of an application can also affect latency. For example, an application that uses a microservices architecture will have higher latency than one that uses a monolithic architecture. This is because microservices architectures are more distributed, which means there is more communication between the different components of the application.
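To put a rough number on the distance factor, the sketch below computes the lower bound that propagation delay alone places on a round trip. It assumes signals travel through optical fibre at about 200,000 km/s (roughly two thirds of the speed of light in a vacuum) and ignores routing detours, queuing and processing time, so real round trips will be slower. The helper name `min_round_trip_ms` and the 6,000 km figure are illustrative assumptions.

```python
# Approximate signal speed in optical fibre, about two thirds of the
# speed of light in a vacuum. A rule-of-thumb figure, not a measurement.
FIBRE_SPEED_KM_PER_S = 200_000.0


def min_round_trip_ms(distance_km, sequential_calls=1):
    """Lower bound on round-trip time from propagation delay alone.

    `sequential_calls` is the number of request/response exchanges that
    must happen one after another over the same distance, for example a
    page that needs several dependent requests before it can render.
    """
    one_way_s = distance_km / FIBRE_SPEED_KM_PER_S
    return 2 * one_way_s * sequential_calls * 1000.0


if __name__ == "__main__":
    # An assumed 6,000 km path, roughly transatlantic: about 60 ms per round trip.
    print(round(min_round_trip_ms(6_000), 1))
    # Four dependent round trips over the same path: about 240 ms.
    print(round(min_round_trip_ms(6_000, sequential_calls=4), 1))
```

No amount of bandwidth or hardware removes this floor, which is why moving the server closer to the user is usually the first lever to consider. The same arithmetic also shows why chatty designs suffer: every additional sequential exchange pays the full round trip again.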

How to Measure Latency

There are several ways to measure latency. Here are two of the most common methods:

- Round trip time (RTT): This is the time it takes for a packet to travel from the client to the server and back.

- Time to first byte (TTFB): This is the time it takes for the first byte of data to be received from the server.
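As a minimal sketch of measuring TTFB, the helper below uses Python's standard `http.client` module. The function name `measure_ttfb` is hypothetical, and the measurement is an approximation: the connection is opened before the timer starts, so DNS lookup and TCP setup are excluded, and the timer stops once the response headers have been parsed and one byte of the body has been read. Some tools define TTFB to include connection setup, so numbers may not be directly comparable.

```python
import http.client
import time


def measure_ttfb(host, port=80, path="/", timeout=5.0):
    """Return the seconds between sending a GET request and the response starting to arrive."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        # Connect up front so that TCP setup is not part of the timing.
        conn.connect()
        start = time.perf_counter()
        conn.request("GET", path)
        # getresponse() returns once the status line and headers have been read.
        response = conn.getresponse()
        # Read the first byte of the body, if there is one.
        response.read(1)
        return time.perf_counter() - start
    finally:
        conn.close()
```

Calling `measure_ttfb("example.com")` returns a single sample in seconds. One sample says little on its own, so in practice it is worth repeating the call several times and looking at the spread.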

Several tools can be used to measure latency. Some of the most common tools include:

- The ping command: This command can measure the round trip time between a client and a server.

- The traceroute command: This command can trace a packet’s path through the network.

- The Network Quality Analysis (NQA) tool: This tool can be used to measure the performance of a network, including latency.
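When `ping` is not available, or ICMP is blocked, a rough stand-in is to time a TCP handshake, which takes about one round trip from the client's point of view. The sketch below is an assumption-laden substitute rather than a reimplementation of `ping`: the helper names are hypothetical, the target must have an open TCP port, and if `host` is a name rather than an IP address the figure also includes DNS resolution.

```python
import socket
import statistics
import time


def tcp_connect_time_ms(host, port, timeout=3.0):
    """Time a single TCP handshake to (host, port) in milliseconds."""
    start = time.perf_counter()
    # create_connection returns once the handshake has completed.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def sample_rtt_ms(host, port, samples=5):
    """Take several handshake timings and summarise them."""
    times = sorted(tcp_connect_time_ms(host, port) for _ in range(samples))
    return {
        "min": times[0],
        "median": statistics.median(times),
        "max": times[-1],
    }
```

For example, `sample_rtt_ms("example.com", 443)` returns a dictionary of minimum, median and maximum timings. The minimum is usually the closest to the underlying network round trip, while the gap between median and maximum hints at congestion or jitter.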

How to Improve Latency

There are several ways to improve latency. Here are some of the most common methods:

- Reduce the distance between the client and server: This can be done using a content delivery network (CDN) or hosting the application closer to the users.

  - Explanation: Data packets have to travel physical distances, which takes time. Reducing this distance can help decrease the time it takes for data to travel.

  - Example: If your main user base is in Europe but your servers are in North America, consider setting up a server in Europe or using a CDN with European endpoints.

- Increase the network bandwidth: This can be done by upgrading the network infrastructure or using a dedicated line.

  - Explanation: Bandwidth determines how much data can be sent over a network at a given time. By increasing bandwidth, you can send more data simultaneously, potentially reducing latency when the link is congested.

  - Example: If your website experiences high traffic during sales, consider upgrading your hosting plan to accommodate the spike in users.

- Use high-quality network hardware: This can help to reduce delays and improve performance.

  - Explanation: Better hardware can process and forward data more efficiently, reducing potential bottlenecks.

  - Example: Replacing old routers with modern ones that support faster data transmission standards.

- Optimize the software configuration: This can be done by ensuring the network is configured correctly and the application is designed to minimize latency.

  - Explanation: How software is configured, including network settings and application structures, can influence how fast data is processed and sent.

  - Example: Implementing efficient load balancers can distribute incoming requests more effectively, ensuring no single server is overwhelmed.

- Use a caching mechanism: This can help reduce the number of times data needs to be retrieved from the server, improving performance.

  - Explanation: Caching stores frequently accessed data temporarily so that it can be retrieved without needing to be fetched from the main server every time.

  - Example: For a website, caching static assets like images or scripts can significantly speed up page load times for returning users.

- Use tracing: Tracing is a technique for tracking data flow through a distributed system. It can be used to identify the source of latency and to troubleshoot performance problems.

  - Explanation: Tracing provides insights into how data moves through a system, revealing potential slowdowns or inefficiencies.

  - Example: By using tools like OpenTelemetry, developers can identify if a particular microservice in their application is taking too long to respond, and then focus on optimizing that specific service.
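The idea behind tracing can be shown with a hand-rolled timer. The sketch below is deliberately not OpenTelemetry and does not use its API; it only mimics the core concept of named spans with durations. The step names and the `time.sleep` calls are stand-ins for real work, and `span`, `handle_request` and `slowest_child` are hypothetical helpers.

```python
import time
from contextlib import contextmanager

# Collected (name, duration_in_seconds) records, in completion order.
spans = []


@contextmanager
def span(name):
    """Record how long the enclosed block takes."""
    start = time.perf_counter()
    try:
        yield
    finally:
        spans.append((name, time.perf_counter() - start))


def handle_request():
    # Hypothetical request pipeline; the sleeps stand in for real work.
    with span("handle_request"):
        with span("auth"):
            time.sleep(0.01)
        with span("database"):
            time.sleep(0.1)
        with span("render"):
            time.sleep(0.01)


def slowest_child(records, root="handle_request"):
    """Return the name of the slowest span other than the root."""
    children = [r for r in records if r[0] != root]
    return max(children, key=lambda r: r[1])[0]


if __name__ == "__main__":
    handle_request()
    for name, seconds in spans:
        print(f"{name}: {seconds * 1000:.1f} ms")
    print("slowest step:", slowest_child(spans))
```

A real tracing system adds what this sketch leaves out: propagating a trace identifier across service boundaries, recording parent and child relationships, and exporting spans to a backend for analysis.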

The trade-offs

There are several trade-offs to consider when trying to improve latency. Some of the most important include:

- Cost: Reducing the distance between the client and server will improve latency but also increase cost, since it means running servers in more locations or paying for a CDN.

- Reliability: Increasing the network bandwidth can improve latency but may also reduce the network's reliability.

- Complexity: Some techniques for improving latency, such as caching, can add complexity to the system.
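The complexity that caching brings can be seen even in a very small cache. The sketch below is a minimal in-memory cache with time-based expiry, written for illustration only: the class name `TTLCache`, the `loader` callback and the ten-second lifetime in the usage note are assumptions, and a production system would more likely rely on an established cache than on hand-written code like this.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # Injectable so expiry can be tested without waiting.
        self._store = {}    # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            # Fast path: served from memory, no trip to the origin.
            return entry[1]
        # Slow path: the entry is missing or expired, so reload it.
        value = loader(key)
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key):
        """Drop an entry early, for example after the underlying data changes."""
        self._store.pop(key, None)
```

A call such as `TTLCache(ttl_seconds=10).get_or_load("price", fetch_price)`, where `fetch_price` is a hypothetical slow lookup, is fast on every call after the first. The cost is that there are now new decisions to make: how long an entry may be stale, who calls `invalidate` when the data changes, and how much memory the cache may use.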

Conclusion

Latency is an essential factor to consider when designing and deploying a distributed system. By understanding the factors that affect latency and using the right tools and techniques, it is possible to improve latency and deliver a better user experience.
